The design of I/O buses forms the arteries of a computer and largely determines how much data can be exchanged between the individual components listed above, and how quickly. The top category is led by components used in the field of High Performance Computing (HPC). As of mid-2020, contemporary representatives of HPC include the Nvidia Tesla and DGX, Radeon Instinct, and Intel Xeon Phi accelerator products (see [1,2] for product comparisons).

Understanding NUMA

Non-Uniform Memory Access (NUMA) describes a shared memory architecture used in contemporary multiprocessing systems. A NUMA system is composed of several individual nodes in such a way that the aggregate memory is shared between all nodes: “each CPU is assigned its own local memory and can access memory from other CPUs in the system” [12,7].

NUMA is a clever scheme for connecting multiple central processing units (CPUs) to whatever amount of memory is available in the computer. The individual NUMA nodes are connected over a scalable network (I/O bus) such that a CPU can systematically access memory associated with other NUMA nodes.

Local memory is the memory attached to the NUMA node on which a CPU runs. Foreign, or remote, memory is memory that a CPU accesses from another NUMA node. The term NUMA ratio describes the ratio of the cost of accessing foreign memory to the cost of accessing local memory. The greater the ratio, the greater the cost, and thus the longer it takes to access the memory.

Accessing memory on another NUMA node, however, takes longer than accessing the CPU's own local memory. Local memory access is a major advantage, as it combines low latency with high bandwidth. In contrast, accessing memory belonging to any other CPU comes with higher latency and lower bandwidth.
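To make the NUMA ratio more concrete, the following minimal sketch estimates the average memory access cost for a workload that sends a given fraction of its accesses to remote memory. The latency figures (80 ns local, 160 ns remote) are purely illustrative assumptions, not measurements, and the function names are hypothetical.

```python
def numa_ratio(local_cost: float, remote_cost: float) -> float:
    """Ratio of remote (foreign) access cost to local access cost."""
    if local_cost <= 0:
        raise ValueError("local_cost must be positive")
    return remote_cost / local_cost


def average_access_cost(local_cost: float, remote_cost: float,
                        remote_fraction: float) -> float:
    """Weighted mean cost when `remote_fraction` of accesses go to another node."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return (1.0 - remote_fraction) * local_cost + remote_fraction * remote_cost


if __name__ == "__main__":
    LOCAL_NS = 80.0    # assumed local latency, for illustration only
    REMOTE_NS = 160.0  # assumed remote latency, for illustration only
    print("NUMA ratio:", numa_ratio(LOCAL_NS, REMOTE_NS))
    for fraction in (0.0, 0.25, 0.5, 1.0):
        cost = average_access_cost(LOCAL_NS, REMOTE_NS, fraction)
        print(f"{fraction:.0%} remote -> {cost:.1f} ns on average")
```

Under these assumed numbers, the average cost rises linearly with the share of remote accesses, which is why keeping data close to the CPU that uses it matters.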

Looking Back: Evolution of Shared-Memory Multiprocessors

Frank Denneman [8] states that modern system architectures do not allow truly Uniform Memory Access (UMA), even though these systems are specifically designed for that purpose. Simply speaking, the idea of parallel computing was to have a group of processors that cooperate to compute a given task, thereby speeding up an otherwise classical sequential computation.

As explained by Frank Denneman [8], in the early 1970s, “the need for systems that could service multiple concurrent user operations and excessive data generation became mainstream” with the introduction of relational database systems. “Despite the impressive rate of uniprocessor performance, multiprocessor systems were better equipped to handle this workload. To provide a cost-effective system, shared memory address space became the focus of research. Early on, systems using a crossbar switch were advocated, however with this design complexity scaled along with the increase of processors, which made the bus-based system more attractive. Processors in a bus system [can] access the entire memory space by sending requests on the bus, a very cost-effective way to use the available memory as optimally as possible.”

However, bus-based computer systems come with a bottleneck: the limited bandwidth of the bus, which leads to scalability problems. The more CPUs that are added to the system, the less bandwidth is available per node. Furthermore, the more CPUs that are added, the longer the bus becomes, and the higher the latency as a result.

Most CPUs were constructed in a two-dimensional plane. CPUs also had to have integrated memory controllers added. The simple solution of having four memory buses (top, bottom, left, right) to each CPU core allowed full available bandwidth, but that goes only so far. CPUs stagnated with four cores for a considerable time. Adding traces above and below allowed direct buses across to the diagonally opposed CPUs as chips became 3D. Placing a four-cored CPU on a card, which then connected to a bus, was the next logical step.

Today, each processor contains many cores with a shared on-chip cache and off-chip memory, and memory access costs vary across different parts of the memory within a server.

Improving the efficiency of data access is one of the main goals of contemporary CPU design. Each CPU core was endowed with a small level one cache (32 KB) and a larger (256 KB) level 2 cache. The various cores would later share a level 3 cache of several MB, the size of which has grown considerably over time.

To avoid cache misses - requesting data that is not in the cache - a lot of research time is spent on finding the right number of CPU caches, caching structures, and corresponding algorithms. See [8] for a more detailed explanation of the snoop caching protocol [4] and cache coherency [3,5], as well as the design ideas behind NUMA.

Software Support for NUMA

There are two software optimization measures that may improve the performance of a system supporting NUMA architecture - processor affinity and data placement. As explained in [19], “processor affinity [… ] enables the binding and unbinding of a process or a thread to a single CPU, or a range of CPUs so that the process or thread will execute only on the designated CPU or CPUs rather than any CPU.” The term “data placement” refers to software modifications in which code and data are kept as close as possible in memory.
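As a minimal sketch of processor affinity, the following Python snippet binds the current process to a single CPU and then restores the original setting. It relies on `os.sched_getaffinity` and `os.sched_setaffinity`, which are available on Linux only; the helper name `pin_to_cpus` is hypothetical. Note that this controls only where the process runs, not where its memory is allocated.

```python
import os


def pin_to_cpus(cpus, pid=0):
    """Bind process `pid` (0 = the calling process) to the given CPU ids.

    Returns the affinity set that the kernel reports afterwards.
    """
    if not hasattr(os, "sched_setaffinity"):
        raise NotImplementedError("os.sched_setaffinity is Linux-specific")
    os.sched_setaffinity(pid, set(cpus))
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    allowed = os.sched_getaffinity(0)   # CPUs the process may currently use
    print("before:", sorted(allowed))
    first = min(allowed)                # pick a CPU we are allowed to run on
    print("after: ", sorted(pin_to_cpus({first})))
    pin_to_cpus(allowed)                # restore the original affinity
```

On a NUMA machine, one would typically choose CPU ids that all belong to the same node, so that the process stays close to the memory it allocates.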

Various operating systems, UNIX-related and otherwise, support NUMA in the following ways (the list below is taken from [14]):

- Silicon Graphics IRIX supports the ccNUMA architecture over 1240 CPUs with the Origin server series.

- Microsoft Windows 7 and Windows Server 2008 R2 added support for NUMA architecture over 64 logical cores.

- Version 2.5 of the Linux kernel already contained basic NUMA support, which was further improved in subsequent kernel releases. Version 3.8 of the Linux kernel brought a new NUMA foundation that allowed for the development of more efficient NUMA policies in later kernel releases [13]. Version 3.13 of the Linux kernel brought numerous policies that aim at putting a process near its memory, together with the handling of cases, such as having memory pages shared between processes, or the use of transparent huge pages; new system control settings allow NUMA balancing to be enabled or disabled, as well as the configuration of various NUMA memory balancing parameters [15].

- Both Oracle Solaris and OpenSolaris model the NUMA architecture with the introduction of logical groups.

- FreeBSD added initial NUMA affinity and policy configuration in version 11.0.
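The Linux entry in the list above mentions system control settings for enabling and disabling NUMA balancing. As a small sketch, the snippet below reads the `kernel.numa_balancing` setting through its file under `/proc/sys`. The file is present only on kernels built with automatic NUMA balancing, so the function returns `None` when it cannot be read; the function name is hypothetical.

```python
from pathlib import Path

NUMA_BALANCING = Path("/proc/sys/kernel/numa_balancing")


def numa_balancing_state(path: Path = NUMA_BALANCING):
    """Return the integer value of the sysctl, or None if it is unavailable."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    state = numa_balancing_state()
    if state is None:
        print("automatic NUMA balancing is not available on this kernel")
    elif state == 0:
        print("automatic NUMA balancing is disabled")
    else:
        print(f"automatic NUMA balancing is enabled (value {state})")
```

Changing the value requires root privileges and is usually done with the `sysctl` command rather than from application code.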

In the book “Computer Science and Technology, Proceedings of the International Conference (CST2016)”, Ning Cai suggests that the study of NUMA architecture has mainly focused on high-end computing environments and proposes NUMA-aware Radix Partitioning (NaRP), which optimizes the performance of shared caches in NUMA nodes to accelerate business intelligence applications. As such, NUMA represents a middle ground between shared memory (SMP) systems with a few processors [6].

NUMA and Linux

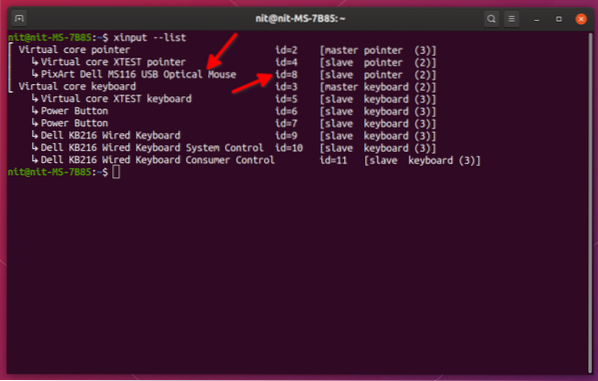

As stated above, the Linux kernel has supported NUMA since version 2.5. Both Debian GNU/Linux and Ubuntu offer NUMA support for process optimization with the two software packages numactl [16] and numad [17]. With the help of the numactl command, you can list the inventory of available NUMA nodes in your system [18]:

# numactl --hardware

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 16 17 18 19 20 21 22 23

node 0 size: 8157 MB

node 0 free: 88 MB

node 1 cpus: 8 9 10 11 12 13 14 15 24 25 26 27 28 29 30 31

node 1 size: 8191 MB

node 1 free: 5176 MB

node distances:

node 0 1

0: 10 20

1: 20 10
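The listing above can also be processed by a script. The following sketch parses the sample output of `numactl --hardware` and derives the relative cost of remote access from the distance table. The parser and its function name are hypothetical and cover only the line formats shown in this article; other numactl versions may print additional lines.

```python
import re

SAMPLE = """\
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 6 7 16 17 18 19 20 21 22 23
node 0 size: 8157 MB
node 0 free: 88 MB
node 1 cpus: 8 9 10 11 12 13 14 15 24 25 26 27 28 29 30 31
node 1 size: 8191 MB
node 1 free: 5176 MB
node distances:
node 0 1
0: 10 20
1: 20 10
"""


def parse_numactl_hardware(text):
    """Extract per-node CPU lists, sizes in MB, and the distance matrix."""
    nodes, distances = {}, {}
    for line in text.splitlines():
        line = line.strip()
        m = re.match(r"node (\d+) cpus:(.*)", line)
        if m:
            cpus = [int(c) for c in m.group(2).split()]
            nodes.setdefault(int(m.group(1)), {})["cpus"] = cpus
            continue
        m = re.match(r"node (\d+) (size|free): (\d+) MB", line)
        if m:
            key = m.group(2) + "_mb"
            nodes.setdefault(int(m.group(1)), {})[key] = int(m.group(3))
            continue
        m = re.match(r"(\d+):((?:\s+\d+)+)$", line)  # a row of the distance table
        if m:
            distances[int(m.group(1))] = [int(d) for d in m.group(2).split()]
    return nodes, distances


if __name__ == "__main__":
    nodes, distances = parse_numactl_hardware(SAMPLE)
    for node_id, info in sorted(nodes.items()):
        print(f"node {node_id}: {len(info['cpus'])} CPUs, "
              f"{info['free_mb']} of {info['size_mb']} MB free")
    # A distance of 10 denotes local access; larger values are relative to it.
    print("relative remote/local cost:", distances[0][1] / distances[0][0])
```

To run the parser against a live system instead of the sample text, the output of `subprocess.run(["numactl", "--hardware"], capture_output=True, text=True).stdout` could be passed in, assuming numactl is installed.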

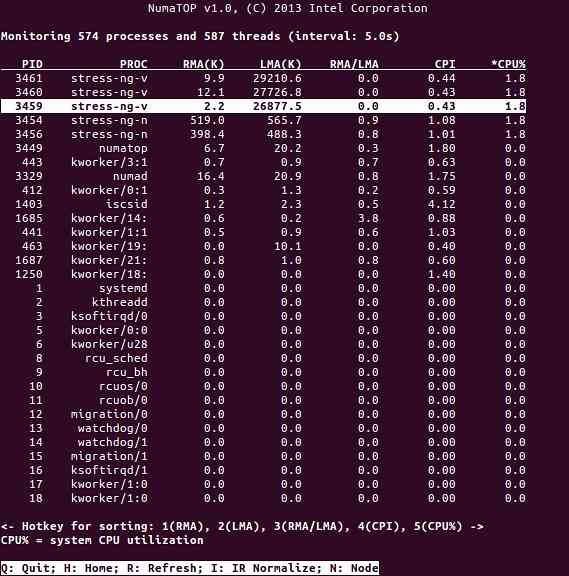

NumaTop is a useful tool developed by Intel for monitoring runtime memory locality and analyzing processes in NUMA systems [10,11]. The tool can identify potential NUMA-related performance bottlenecks and hence help to re-balance memory/CPU allocations to maximise the potential of a NUMA system. See [9] for a more detailed description.

Usage Scenarios

Computers that support NUMA technology allow all CPUs to access the entire memory directly - the CPUs see this as a single, linear address space. This leads to more efficient use of the 64-bit addressing scheme, resulting in faster movement of data, less replication of data, and easier programming.

NUMA systems are quite attractive for server-side applications, such as data mining and decision support systems. Furthermore, writing applications for gaming and high-performance software becomes much easier with this architecture.

Conclusion

In conclusion, NUMA architecture addresses scalability, which is one of its main benefits. In a NUMA system, a CPU has higher bandwidth or lower latency when accessing memory on its own node than when accessing memory on another node (e.g., if a local CPU and a remote CPU request memory access at the same time, the local CPU has priority). This dramatically improves memory throughput if the data are localized to specific processes (and thus processors). The disadvantage is the higher cost of moving data from one processor to another. As long as this does not happen too often, a NUMA system will outperform systems with a more traditional architecture.

Links and References

- [1] Compare NVIDIA Tesla vs. Radeon Instinct, https://www.itcentralstation.com/products/comparisons/nvidia-tesla_vs_radeon-instinct

- [2] Compare NVIDIA DGX-1 vs. Radeon Instinct, https://www.itcentralstation.com/products/comparisons/nvidia-dgx-1_vs_radeon-instinct

- [3] Cache coherence, Wikipedia, https://en.wikipedia.org/wiki/Cache_coherence

- [4] Bus snooping, Wikipedia, https://en.wikipedia.org/wiki/Bus_snooping

- [5] Cache coherence protocols in multiprocessor systems, GeeksforGeeks, https://www.geeksforgeeks.org/cache-coherence-protocols-in-multiprocessor-system/

- [6] Computer science and technology - Proceedings of the International Conference (CST2016), Ning Cai (Ed.), World Scientific Publishing Co Pte Ltd, ISBN: 9789813146419

- [7] Daniel P. Bovet and Marco Cesati: Understanding NUMA architecture in Understanding the Linux Kernel, 3rd edition, O'Reilly, https://www.oreilly.com/library/view/understanding-the-linux/0596005652/

- [8] Frank Denneman: NUMA Deep Dive Part 1: From UMA to NUMA, https://frankdenneman.nl/2016/07/07/numa-deep-dive-part-1-uma-numa/

- [9] Colin Ian King: NumaTop: A NUMA system monitoring tool, http://smackerelofopinion.blogspot.com/2015/09/numatop-numa-system-monitoring-tool.html

- [10] Numatop, https://github.com/intel/numatop

- [11] Package numatop for Debian GNU/Linux, https://packages.debian.org/buster/numatop

- [12] Jonathan Kehayias: Understanding Non-Uniform Memory Access/Architectures (NUMA), https://www.sqlskills.com/blogs/jonathan/understanding-non-uniform-memory-accessarchitectures-numa/

- [13] Linux Kernel News for Kernel 3.8, https://kernelnewbies.org/Linux_3.8

- [14] Non-uniform memory access (NUMA), Wikipedia, https://en.wikipedia.org/wiki/Non-uniform_memory_access

- [15] Linux Memory Management Documentation, NUMA, https://www.kernel.org/doc/html/latest/vm/numa.html

- [16] Package numactl for Debian GNU/Linux, https://packages.debian.org/sid/admin/numactl

- [17] Package numad for Debian GNU/Linux, https://packages.debian.org/buster/numad

- [18] How to find if NUMA configuration is enabled or disabled?, https://www.thegeekdiary.com/centos-rhel-how-to-find-if-numa-configuration-is-enabled-or-disabled/

- [19] Processor affinity, Wikipedia, https://en.wikipedia.org/wiki/Processor_affinity

Thank You

The authors would like to thank Gerold Rupprecht for his support while preparing this article.

About the Authors

Plaxedes Nehanda is a multiskilled, self-driven versatile person who wears many hats, among them, an events planner, a virtual assistant, a transcriber, as well as an avid researcher, based in Johannesburg, South Africa.

Prince K. Nehanda is an Instrumentation and Control (Metrology) Engineer at Paeflow Metering in Harare, Zimbabwe.

Frank Hofmann works on the road - preferably from Berlin (Germany), Geneva (Switzerland), and Cape Town (South Africa) - as a developer, trainer, and author for magazines like Linux-User and Linux Magazine. He is also the co-author of the Debian package management book (http://www.dpmb.org).
